Welcome to the second edition of the AI4India Weekly, your premier source for the latest developments and exciting insights from the rapidly evolving field of artificial intelligence. As we delve into this week's edition, we're excited to present a comprehensive analysis of the ongoing competition among the industry's leading tech giants, exploring the profound impacts of their innovations on technology and society. From OpenAI's groundbreaking advancements to Google's strategic strides, our feature article by Omkar Patil offers an in-depth look at the "Clash of the Titans" that is shaping the future of AI.

In this edition, our news section also highlights significant national initiatives and technological strides in AI. Stay tuned for these stories and more as we navigate the latest in AI advancements and their implications for India and beyond.

Clash of the Titans: The Battle for AI Supremacy

by Omkar Patil

The technology industry is a battlefield where giants clash and shape history. From the early days of pioneers like Bill Gates and Steve Jobs to the recent rivalry between Sam Altman and Sundar Pichai, these titans have driven monumental changes in technology and, consequently, have had a profound impact on humanity.

In the realm of Artificial Intelligence, OpenAI and Google have recently engaged in a fierce battle for dominance, with Large Language Models as their primary weapons. Over the last decade, the focus was on vision-based models, with advances ranging from the ImageNet dataset to Convolutional Neural Networks. Language modeling, by contrast, remained relatively stagnant, stuck between Bag of Words and TF-IDF. Google disrupted this landscape with the introduction of the Transformer, a groundbreaking invention detailed in its "Attention Is All You Need" paper. Although the paper captured widespread attention, operationalizing Transformers posed significant challenges. While this invention languished within Google's bureaucratic structure, Ilya Sutskever and Sam Altman at OpenAI recognized its immense potential and committed substantial resources to its development.
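For readers who have not met the older techniques mentioned above, here is a minimal sketch of Bag of Words and TF-IDF in plain Python. The toy corpus and the function names are illustrative assumptions, and the weighting uses the simple tf × log(N / df) variant rather than any particular library's formula.

```python
import math
from collections import Counter

def bag_of_words(doc):
    """Count how often each lowercase, whitespace-separated token appears."""
    return Counter(doc.lower().split())

def tf_idf(docs):
    """Return one {term: weight} dict per document, using tf * log(N / df)."""
    counts = [bag_of_words(d) for d in docs]
    n_docs = len(docs)
    # Document frequency: the number of documents that contain each term.
    df = Counter(term for c in counts for term in c)
    weights = []
    for c in counts:
        total = sum(c.values())
        weights.append(
            {t: (n / total) * math.log(n_docs / df[t]) for t, n in c.items()}
        )
    return weights

# Hypothetical toy corpus, for illustration only.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "transformers changed language modeling",
]
scores = tf_idf(corpus)
```

Both representations discard word order entirely, so "cat sat" and "sat cat" look identical to them. That is the kind of limitation attention-based models were designed to overcome.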

OpenAI was initially hesitant to release its breakthroughs in full, citing concerns about potential misuse for nefarious purposes. When GPT-2 was eventually released, it marked a departure from the encoder-decoder architecture typically employed in transformer models: it uses only the decoder. The OpenAI team found that a decoder-only model scaled better than the encoder-decoder architecture, where cross-attention calculations incurred significant computational costs.
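To make "decoder-only" concrete, here is a minimal sketch of causal self-attention, the core operation of a decoder block, in plain Python. It is a simplified single-head version with no learned projections, and the function names are assumptions for illustration. It shows the masking only and does not measure the cost difference described above.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def causal_self_attention(q, k, v):
    """q, k, v: lists of equal-length vectors, one per token position."""
    d = len(q[0])
    out = []
    for i in range(len(q)):
        # Causal mask: position i attends only to positions 0..i, never ahead.
        scores = [
            sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        w = softmax(scores)
        out.append(
            [sum(w[j] * v[j][c] for j in range(i + 1)) for c in range(len(v[0]))]
        )
    return out

# Three toy token vectors; queries, keys, and values are all the same here.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
y = causal_self_attention(x, x, x)
```

Because each position sees only earlier positions, changing a later token never alters earlier outputs. An encoder-decoder model adds a second attention step in every decoder layer, in which the decoder attends over the encoder's outputs; that extra step is the cross-attention.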

To understand the LLM pretraining process, let's rewind. From a practical standpoint, pretraining an LLM still resembles alchemy. As the CEO of an AI company, you oversee a fleet of GPUs dedicated to research and development. You divide the research GPUs among your tech wizards, hoping that your most talented engineers, armed with their share of GPUs, will discover the magical combination of hyperparameters that will yield the State of the Art (SOTA) LLM.
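The hunt for that magical combination can be pictured as a search loop. Below is a minimal sketch of random search over two hyperparameters. The loss function is a made-up stand-in for a real training run, and its optimum (a learning rate of 3e-4 and a batch size of 256) is an arbitrary assumption chosen for illustration only.

```python
import math
import random

def toy_validation_loss(lr, batch_size):
    """Hypothetical stand-in for a full training run (not a real model)."""
    return (math.log10(lr) - math.log10(3e-4)) ** 2 + (
        math.log2(batch_size) - 8
    ) ** 2 / 10

def random_search(n_trials, seed=0):
    """Sample hyperparameters at random and keep the lowest-loss trial."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-5, -2)        # learning rate, log-uniform
        batch_size = 2 ** rng.randint(5, 11)  # batch size from 32 to 2048
        trials.append((toy_validation_loss(lr, batch_size), lr, batch_size))
    return min(trials), trials

best, trials = random_search(20)
```

In this toy, each trial costs a function call. In real pretraining, each trial is a training run on a share of the GPU fleet, which is why the search budget is so tightly rationed.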

In 2023, the race to develop the most advanced LLM intensified as companies like OpenAI, Google, and Meta invested heavily in their efforts. Using their respective resources, such as GPUs and TPUs, these tech giants attempted to create a model that would surpass the current SOTA. At the beginning of 2023, OpenAI struck gold with the release of GPT-4, which demonstrated remarkable capabilities such as generating creative content and performing complex tasks. This development marked a defining moment in the field of natural language processing (NLP) and served as a wake-up call for tech companies worldwide. Consequently, venture capitalists and founders around the world came to rely on OpenAI's technology, recognizing its transformative potential. However, the steep cost of accessing GPT-4 was a challenge for many organizations, which had no comparable alternative to OpenAI's exclusive offering.

As Google struggled to make its move, Meta boldly announced the creation of an open-source LLM called Llama. Although it lagged behind GPT-3.5, Llama 1 brought a breath of fresh air for startup founders like me, who envisioned an alternative universe free from the limitations imposed by OpenAI's offerings. Within days of the Llama release, Georgi Gerganov from Bulgaria developed an entire LLM inference engine in C++ and open-sourced it as llama.cpp. This breakthrough eliminated the need for expensive GPU-based systems and Python dependencies, making it possible to run an LLM on a regular laptop without any setup. The tech industry responded enthusiastically, with numerous startups releasing their own fine-tuned versions of Llama (such as Alpaca and Vicuna) trained on specific datasets, with Hugging Face as the central point of distribution. However, while these fine-tuned models proved to be extremely accurate in individual cases, they still could not match the general capabilities of OpenAI's models.

Over the next few months, Anthropic released its Claude LLM and Google responded with Gemini, but both still fell short of OpenAI's unbeaten GPT-4. However, a relatively unknown French startup, Mistral AI, made significant progress by releasing models comparable to GPT-3.5, shifting the competitive landscape and making GPT-4-level models appear more attainable. Google’s Gemini 1.5 Pro and its 1-million-token context window further fueled speculation. Ultimately, Anthropic broke through the barrier, surpassing GPT-4 with Claude 3 Opus. This achievement required over a year of effort and investments from Amazon and Google.

The past year witnessed a series of boardroom dramas, firings, and rehirings of prominent figures in the tech industry that could fuel a captivating movie or even a full season of Silicon Valley. While these events were undoubtedly intriguing, let's shift our focus to the current technical landscape to assess the positions of OpenAI, Google, and Meta.

Large Language Models (LLMs) operate on the principle of compression, similar to zipping and unzipping a text file. Unlike a zip file, however, an LLM cannot perfectly reproduce its training data, which causes side effects such as hallucination and an inability to explain the source of the data. Because the amount of human-generated text in the world is not increasing exponentially, LLM capabilities are improving along a very slow incline rather than growing linearly or exponentially. One publication also suggests that all deep neural networks are converging towards an ideal representation. Consequently, the significant capability jump witnessed between GPT-3.5 and GPT-4 may not be replicable in the future. Instead, we can anticipate incremental improvements from all players, with diminishing gains in capabilities.
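The compression analogy can be made concrete: the better a model predicts a piece of text, the fewer bits are needed to encode it. The sketch below compares a model that treats every character as equally likely with one that has learned character frequencies. The character-frequency model is only a toy stand-in for an LLM, and the names and sample text are assumptions for illustration.

```python
import math
from collections import Counter

def bits_to_encode(text, probs):
    """Ideal code length in bits: the sum of -log2 p(symbol) over the text."""
    return sum(-math.log2(probs[ch]) for ch in text)

text = "abracadabra abracadabra"
counts = Counter(text)
alphabet = sorted(counts)

# Baseline: every character in the alphabet is equally likely.
uniform = {ch: 1 / len(alphabet) for ch in alphabet}
# "Trained" model: probabilities match the observed character frequencies.
learned = {ch: n / len(text) for ch, n in counts.items()}

uniform_bits = bits_to_encode(text, uniform)
learned_bits = bits_to_encode(text, learned)
```

Here `learned_bits` comes out smaller than `uniform_bits`, because the learned model has captured some of the text's regularity. An LLM plays the same game at a vastly larger scale, predicting each token from everything that came before it.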

Now that the stage has been set, it's time to examine last week’s events. The date of Google I/O had already been announced, but in an unexpected turn of events, OpenAI scheduled an event of its own just a day before it. Sam Altman, Mira Murati, and the OpenAI team showcased their new model, GPT-4o (the "o" stands for "omni"), an impressive offering that combines vision, text, and speech capabilities in a single ChatGPT app. While OpenAI may be analogous to Prometheus, the mythical Greek Titan who gifted fire to humankind, Google stands as a titan of a different sort, akin to Zeus, the king of gods, with a colossal revenue of $280 billion compared to OpenAI's $2 billion. Google's approach was gradual and well-structured: it showcased advancements across multiple Google products, including Google Search and Google Workspace, rather than relying on a single app. It also highlighted Veo, a competitor to OpenAI's Sora, and Project Astra, a framework for future generations of AI assistants. Unlike OpenAI, Google took a strategic approach by publishing open-source models. This move ensures that the industry's momentum continues, fostering innovation and progress.

The past year has demonstrated that the boundaries of science and technology can be relentlessly pushed through a combination of focused leadership and technological breakthroughs. OpenAI, a “non-profit” organization dedicated to the development of safe Artificial General Intelligence, has been at the forefront of this effort since its inception. However, the departure of Ilya Sutskever, OpenAI's Chief Scientist and a strong advocate for "Superalignment", has raised concerns about the organization's commitment to safety. There is also the question of whether Large Language Models (LLMs) as a technology are losing momentum and need to be supplemented by external search-based innovations such as the mythical Q-Star.

As tech giants like Meta, Anthropic, Google, and OpenAI continue to push the boundaries of science, their innovations have the potential to usher humanity into a new era. However, the ultimate impact of these advancements, whether positive or negative, remains uncertain.

NOTE: The views expressed by the author are his own. AI4India as a forum does not endorse any comments on specific brands, products, platforms or companies.

AI Snapshots of the Week

In the weekly AI Snapshots section, we take our readers through some selected exciting AI-related developments. Here are some of the important pieces of news from last week.

Government of India to procure 10,000 GPUs

Announcing the Indian Government’s plan to invest in procuring 10,000 GPUs, Amitabh Kant, former CEO of NITI Aayog and India’s G20 Sherpa, spoke about India’s potential in the field of Artificial Intelligence as the country takes huge strides forward.

Bhashini: Bridging the Language Divide

India's groundbreaking digital initiative, Bhashini, which was launched two years ago, recently showcased its remarkable efficacy through a significant demonstration: during an interaction between Gates Foundation chairman Bill Gates and Prime Minister Narendra Modi, the two conversed without interpreters, even though the PM spoke in Hindi. This feat was made possible by equipping Gates with an AI-powered headpiece that translated PM Modi's Hindi remarks in real time. Bhashini aims to bridge the language gap by enabling conversations across India's 22 scheduled languages using innovative technology. To delve deeper into the intricacies and potential of this project, StratNews Global interviewed Amitabh Nag, CEO of Bhashini. Watch the full interview video here

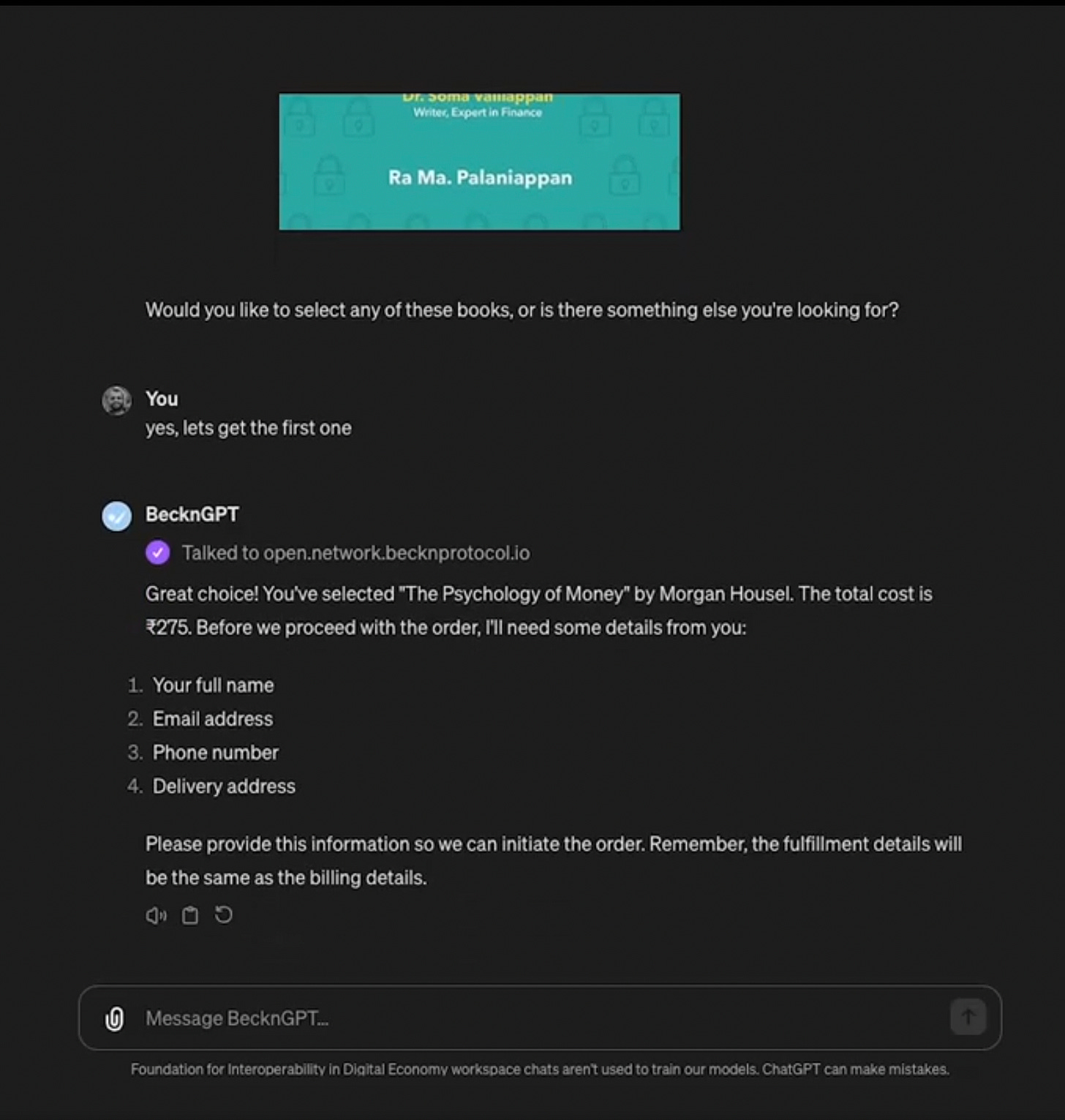

BECKN introduces the Beckn Action Bot - an AI bot that helps you with your shopping

The Beckn Protocol, at the forefront of India's decentralized open protocol movement, facilitates location-aware, local commerce. Beckn works like a digital language that computers use to share information in a way they both understand, much like how websites use HTTP or emails use SMTP. This open-source protocol empowers diverse consumer applications to discover and access services and sales offers across industries, leveraging 5G and high-speed networks to implement e-commerce capabilities effectively. Beckn has introduced an AI bot that goes beyond e-commerce recommendations: it can handle your shopping tasks, marking a significant leap where AI meets Beckn. Watch the Beckn Action Bot demo, showcasing how the protocol and open networks enable AI-driven transactions.

Indian military ramps up AI capabilities

India is significantly enhancing its AI capabilities in the military sector to keep pace with regional powers like China. Despite India's relatively modest AI investment of $50 million annually compared to China's substantial spending, efforts are underway to develop indigenous AI technology for defense applications. This includes robotic systems for tasks such as traversing rugged terrain and scouting, as well as collaborations with international partners to strengthen AI infrastructure and security. Read more

DD Kisan launches two AI Anchors

DD Kisan, the Indian government's dedicated TV channel for farmers, launched two AI anchors named AI Krish and AI Bhoomi on May 26, 2024. These AI anchors, capable of speaking in 50 different languages, will deliver continuous updates on agricultural research, market trends, weather, and government schemes, thereby helping farmers make informed decisions and reach a diverse audience across India and internationally. Read more

Indian sports industry gets artificial intelligence boost from IIT Madras

IIT Madras is set to boost the Indian sports industry by integrating advanced artificial intelligence technologies. The institute's AI and data analytics tools, already utilized by cricket teams such as Royal Challengers Bangalore, will now support around 30 sports tech startups annually. This initiative aims to improve player performance, offer sophisticated analytics, and foster innovation in various sports, including chess, boxing, and tennis. Read More

Public Skepticism and Usage Trends of AI-Generated News Across Six Countries

A study by the Reuters Institute examines public perceptions of generative AI in news across six countries, revealing widespread concerns about trust and accuracy. Despite these reservations, many people continue to use AI-generated news content for its convenience and benefits. The findings emphasize the necessity for transparency and reliability in AI journalism to uphold public trust. Read More

Visit AI4India.org and join our forum to be a part of the AI revolution in India.

Follow us on X and LinkedIn to receive interesting updates and analysis of AI-related news.